Back in May 2023, I stumbled my way into a full-blown interest in what people are erroneously calling “artificial intelligence”. I’d played with Midjourney back when it first gained traction, and even paid for a month of access, but my interest quickly faded. That changed when I learned there was a growing community of people dedicated to providing free access to open source text-to-image models through a system called the AI Horde.

After joining their Discord server, I saw that there were two existing iOS apps. The first was AiPainter, built in Unity by a Chinese developer so it could be cross-platform. The second was Stable Horde, built in Flutter, which no longer works and has been abandoned by its developer. Aside from that one working app, people on iOS mainly used the Horde through ArtBot, the most popular web client for the Horde. They could also use Midjourney-style Discord bots, which works for some people.

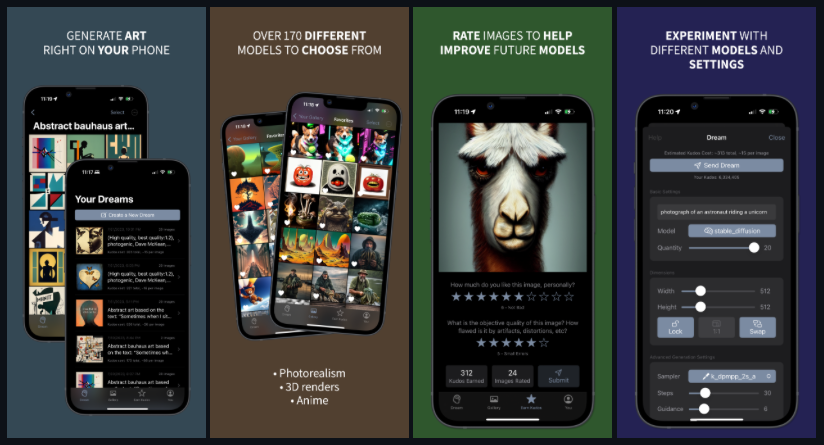

There was clearly a big, glaring hole that I could fill with a nicely designed, native app for Apple platforms. As with PiBar, the open source, anarchist-adjacent, ‘everything should be free’ leanings of the Horde community also deeply appealed to me. The icing on the cake is that the Horde community itself is pretty cool, and it managed to get me more interested in generative art.

So here we are, around two months later: Aislingeach is out on the App Store as a relatively rough version 1.0. You can also try the latest and greatest on TestFlight for free. Or, you know, just fork the repo and build it yourself!

This was a fun project for me because it forced me to finally use collection views in UIKit, which I’d somehow never needed before now. In this case, I needed essentially a Photos.app-like gallery: a thumbnail view that, when tapped, leads into a detail view with side-to-side swiping. I’m still clearly doing some things wrong around cell reuse, and I need to address them. The symptom is that some images flash in the detail view when you favorite them.
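One common cause of this kind of flicker is a cell that clears its image and reloads it asynchronously every time it is reconfigured. Another is a stale load result landing on a cell that has already been reused for a different item. This is not a confirmed diagnosis of the bug above. The sketch below shows the usual guard: cached images are shown immediately, and an async result is only applied if the cell still represents the same item. `ThumbnailCell` and `ImageCache` are hypothetical names. They use Foundation only so the sketch runs outside UIKit. In a real app, `image` would be a `UIImage` feeding a `UIImageView`.

```swift
import Foundation

// Hypothetical shared cache keyed by image ID. A plain dictionary keeps the
// sketch portable; an app might use NSCache instead.
final class ImageCache {
    private var storage: [UUID: Data] = [:]

    subscript(id: UUID) -> Data? {
        get { storage[id] }
        set { storage[id] = newValue }
    }
}

// Hypothetical stand-in for a UICollectionViewCell subclass. In a real app,
// `image` would be a UIImage assigned to the cell's UIImageView.
final class ThumbnailCell {
    private(set) var representedID: UUID?
    private(set) var image: Data?

    // Mirrors UICollectionViewCell.prepareForReuse(): forget the old item.
    func prepareForReuse() {
        representedID = nil
        image = nil
    }

    // Shows a cached image immediately, so reconfiguring the same item
    // (for example after toggling a favorite) never blanks the cell.
    // Otherwise starts `load` and applies the result only if the cell
    // still represents the same item when the load finishes.
    func configure(id: UUID,
                   cache: ImageCache,
                   load: (UUID, @escaping (Data) -> Void) -> Void) {
        representedID = id
        if let cached = cache[id] {
            image = cached
            return
        }
        image = nil
        load(id) { [weak self] data in
            cache[id] = data
            // Drop stale results that arrive after the cell was reused.
            guard let self = self, self.representedID == id else { return }
            self.image = data
        }
    }
}
```

The identifier check is cheap and independent of how images are fetched. The cache is what keeps a reconfigured cell from briefly showing nothing.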

I’d used CoreData in projects before, but this was the first project where I relied entirely on the compile-time generated classes and didn’t create my own classes at all. It worked really well and I have no complaints, because I don’t really need to build a bunch of logic directly into the classes (which seems like a bad paradigm anyway). I also used the “Allows External Storage” option for the image data, and that works really well too.

The most challenging part of this project was coming up with a way to manage the request queue and make sure nothing was lost. When an app is suddenly backgrounded, any in-flight requests get cut off. An image that was loading in the UI will error out and never appear, or an image downloading in the background won’t finish downloading.

For that last case, I ended up building a table in CoreData (why not, I was already using it) that I feed all the pending download URLs into. Once a file has been downloaded and the image is saved to the database, its pending download is removed from the table. It works great so far. However, you can still end up in a scenario where a request says it’s downloading images but no downloads made it into the download table, so it’s not fully dialed in just yet.
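The pending-download table can be sketched in a few lines. This sketch uses an in-memory `Set` in place of the CoreData entity so it runs anywhere. `PendingDownload`, `PendingDownloadStore`, and `isStuck(requestID:savedImageCount:)` are hypothetical names, not Aislingeach’s actual code. The last one shows one possible way to detect the stuck-request case described above.

```swift
import Foundation

// Hypothetical in-memory stand-in for the CoreData pending-downloads table.
// In the app each row would be a managed object; a struct keeps this runnable.
struct PendingDownload: Hashable {
    let requestID: UUID
    let url: URL
}

final class PendingDownloadStore {
    private(set) var rows: Set<PendingDownload> = []

    // Record every URL a finished request reports before any download starts,
    // so work cut off by backgrounding can be resumed on the next launch.
    func enqueue(requestID: UUID, urls: [URL]) {
        for url in urls {
            rows.insert(PendingDownload(requestID: requestID, url: url))
        }
    }

    // Remove a row only after its image has been saved to the database.
    func markSaved(_ download: PendingDownload) {
        rows.remove(download)
    }

    // Whatever is still here at launch was interrupted and should be retried.
    // Sorted only to make the order deterministic.
    func downloadsToResume() -> [PendingDownload] {
        rows.sorted { $0.url.absoluteString < $1.url.absoluteString }
    }

    // Hypothetical check, meant to be called for requests marked as downloading:
    // no pending rows and no saved images means the URLs never reached the table.
    func isStuck(requestID: UUID, savedImageCount: Int) -> Bool {
        savedImageCount == 0 && !rows.contains(where: { $0.requestID == requestID })
    }
}
```

A request flagged by `isStuck` could be re-queried for its image URLs rather than left in a downloading state. Whether that fits the Horde API’s retention window is an assumption worth checking.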

This is really just the very beginning for this project. I intend to do my best to support all the AI Horde features, dial in a proper iPad interface, and get some sort of cloud syncing working (CloudKit, maybe). We’re still in the very early days of generative art in the open source community. There’s no guarantee that the way something works now will be the way it works a year from now. So I’m trying to keep an open mind and stay light on my feet about what I support and how I support it. We’ll see how it goes!

- Aislingeach on the App Store: https://apps.apple.com/us/app/aislingeach/id6449862063

- Aislingeach on TestFlight: https://testflight.apple.com/join/Q6WyyEpS

- Aislingeach on GitHub: https://github.com/amiantos/aislingeach